Imagine data as water flowing through a long network of pipes. At the source, the water is clear and sparkling. But as it travels, it collects sediment, leaks through cracks, and sometimes picks up unwanted chemicals. By the time it reaches the final glass, it may not resemble the pristine stream it once was. This gradual contamination is a quiet saboteur. In this article, we call it data entropy, borrowing loosely from the information-theoretic term to describe how meaning erodes across the machine learning lifecycle.

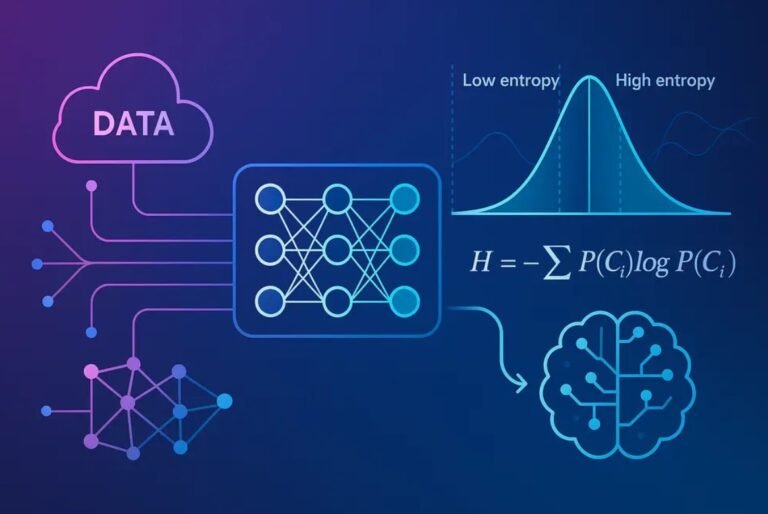

Instead of treating data entropy as a technical term alone, think of it as the slow fading of meaning. When data moves through collection, preprocessing, transformation, storage, modelling, and deployment, each step risks losing something vital. Understanding how and where this erosion happens allows us to protect the clarity of insights and the reliability of predictions.

One might explore this more deeply while studying structured learning paths such as a data science course in Pune, where discussions about data quality and modelling often take center stage.

The Journey Begins: When Clean Data Meets Reality

Data rarely enters an organization as a neat spreadsheet. It arrives messy, busy, noisy, and unpredictable. A sensor misfires, a user mistypes, a survey respondent leaves fields blank. Before the first algorithm even sees the data, it has already begun drifting away from pure meaning.

This is the earliest stage where entropy takes root. Every missing value, inconsistent format, or unverified record is like fine dust settling onto what could have been a clear window. Preprocessing steps such as normalization, imputation, and deduplication help, but each correction also introduces assumptions. And assumptions are subtle sculptors of meaning.
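To make the point about assumptions concrete, here is a minimal sketch of mean imputation. The sensor readings are hypothetical, and mean imputation is only one of several possible strategies. The sketch shows that filling every gap with the average keeps the mean intact while shrinking the spread of the data.

```python
import statistics

# Hypothetical sensor readings; None marks a missing value.
readings = [12.0, 15.0, None, 11.0, 30.0, None, 14.0, 13.0]

observed = [r for r in readings if r is not None]
mean_value = statistics.mean(observed)

# Mean imputation: every gap is filled with the same number.
imputed = [mean_value if r is None else r for r in readings]

# Population standard deviation before and after filling the gaps.
spread_observed = statistics.pstdev(observed)
spread_imputed = statistics.pstdev(imputed)

print(f"mean of observed values:  {mean_value:.2f}")
print(f"spread before imputation: {spread_observed:.2f}")
print(f"spread after imputation:  {spread_imputed:.2f}")
```

The imputed column looks complete, but it now carries a hidden assumption: that every missing reading was perfectly average. Anything downstream that relies on variance inherits that assumption.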

Whether we simplify a numeric scale, group categories, or remove outliers, we are making a trade between clarity and completeness. The challenge is not to eliminate entropy entirely, but to manage it with intention.

Feature Engineering: Creativity Meets Compromise

Feature engineering is often where models learn how to interpret the world. Yet this stage can unintentionally magnify entropy. When we select variables to keep or discard, we are essentially choosing the color filters through which the model will see reality.

Creating new features from existing ones can illuminate hidden patterns, but it can also distort the relationships in the data. For example:

- Combining age groups into broader bands can improve generalization, but at the cost of precision.

- Rescaling, clipping, or log-transforming values to fit model expectations can compress variation that was meaningful.

- Encoding categories as numbers may accidentally imply ranking when none exists.
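The first and last trade-offs in the list above can be sketched in a few lines of plain Python. The ages and colors are made-up values, and `squared_distance` is a helper introduced only for this illustration.

```python
# Banding: hypothetical ages collapse into decade-wide groups.
ages = [31, 39, 40]
bands = [f"{(a // 10) * 10}s" for a in ages]
print(bands)  # 31 and 39 become indistinguishable

# Hypothetical categorical feature with no natural order.
colors = ["red", "green", "blue", "green", "red"]
categories = sorted(set(colors))  # alphabetical: blue, green, red

# Integer encoding: compact, but the codes suggest blue < green < red.
to_code = {name: i for i, name in enumerate(categories)}
integer_encoded = [to_code[c] for c in colors]

# One-hot encoding: one indicator per category, with no implied order.
one_hot = [[1 if c == name else 0 for name in categories] for c in colors]


def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


print(integer_encoded)
# Distance from red to green, and from red to blue, under one-hot encoding.
print(squared_distance(one_hot[0], one_hot[1]),
      squared_distance(one_hot[0], one_hot[2]))
```

With integer codes, a distance-based model would treat red as further from blue than from green, a ranking that exists only in the encoding. One-hot vectors keep every pair of distinct categories equally far apart, at the cost of more columns.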

This stage feels artistic, but every brushstroke matters. The more layers of transformation added, the harder it becomes to trace back to the original truth.

Model Training: The Loss of Context

Models learn by compression. They attempt to distill the informative essence of data into a set of internal parameters. But compression comes at the price of detail. A model may learn correlations without understanding reasons, leading to brittle decision-making.

When the model attempts to generalize, entropy manifests as:

- Overfitting, where noise is mistaken for significance

- Underfitting, where complexity is ignored

- Concept drift, where the relationships the model learned from past data no longer hold for new data
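The first two failure modes can be illustrated with a small experiment. This is a sketch under stated assumptions: the sine-shaped signal, the noise level, and the polynomial degrees are arbitrary choices for demonstration, and NumPy is assumed to be installed.

```python
import numpy as np

rng = np.random.default_rng(0)


def true_signal(x):
    """The underlying pattern the model is supposed to recover (assumed)."""
    return np.sin(2 * np.pi * x)


# Ten noisy training points and a separate noisy test set from the same process.
x_train = np.linspace(0.0, 1.0, 10)
y_train = true_signal(x_train) + rng.normal(0.0, 0.2, size=x_train.size)
x_test = np.linspace(0.05, 0.95, 50)
y_test = true_signal(x_test) + rng.normal(0.0, 0.2, size=x_test.size)


def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, test MSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = float(np.mean((np.polyval(coeffs, x_train) - y_train) ** 2))
    test_mse = float(np.mean((np.polyval(coeffs, x_test) - y_test) ** 2))
    return train_mse, test_mse


for degree in (1, 3, 9):
    train_mse, test_mse = fit_and_score(degree)
    print(f"degree {degree}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```

The straight line (degree 1) cannot follow the curve, so it underfits. The degree 9 polynomial passes through all ten training points, noise included, so its training error is close to zero while its error on unseen points is not. Comparing training and test error side by side is one simple way to make this kind of information loss visible.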

If the model never saw rainy days in training, it might assume the world is always sunny. The data was never technically wrong, but it was incomplete. Entropy whispers: “There was more to the story.”

Deployment and Real-World Chaos

Once a model is deployed, entropy accelerates. Data in motion is unpredictable. User behavior changes. Market conditions shift. Devices degrade. Context evolves.

A model trained on past information must constantly negotiate with a present it never fully met.

This is where monitoring and feedback loops become critical. If the system does not track shifts in incoming data, error rates can climb silently, with nothing in the pipeline raising an alarm. Over time, even the best-designed models degrade, not because they were poorly built, but because reality refuses to stay still.
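One common way to track such shifts is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is a minimal version, assuming NumPy is available. The function name, the ten-bin default, and the simulated data are illustrative choices, not a standard API.

```python
import numpy as np


def population_stability_index(reference, current, n_bins=10):
    """PSI between a reference sample and a current sample of one numeric feature."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges come from reference quantiles, so each bin holds
    # roughly the same share of the reference data.
    inner_edges = np.quantile(reference, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    ref_counts = np.bincount(
        np.searchsorted(inner_edges, reference, side="right"), minlength=n_bins
    )
    cur_counts = np.bincount(
        np.searchsorted(inner_edges, current, side="right"), minlength=n_bins
    )
    # A small floor avoids log(0) when a bin is empty.
    eps = 1e-6
    ref_share = np.clip(ref_counts / ref_counts.sum(), eps, None)
    cur_share = np.clip(cur_counts / cur_counts.sum(), eps, None)
    return float(np.sum((cur_share - ref_share) * np.log(cur_share / ref_share)))


# Simulated feature values: training data, fresh data from the same
# distribution, and fresh data whose mean has shifted by one unit.
rng = np.random.default_rng(42)
training_feature = rng.normal(0.0, 1.0, size=5000)
same_world = rng.normal(0.0, 1.0, size=5000)
shifted_world = rng.normal(1.0, 1.0, size=5000)

psi_stable = population_stability_index(training_feature, same_world)
psi_shifted = population_stability_index(training_feature, shifted_world)
print(f"PSI, same distribution:    {psi_stable:.4f}")
print(f"PSI, shifted distribution: {psi_shifted:.4f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though these thresholds are conventions rather than guarantees and should be tuned to the feature and the cost of a missed drift.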

Organizations that fail to refresh their models regularly end up trusting predictions that no longer reflect the world outside their dashboards.

Guarding Against Entropy: A Mindful Discipline

Fighting entropy is not about eliminating information loss, but about slowing it down and making it visible. Strong data governance, careful pipeline design, documentation of transformations, and continuous monitoring are all essential.
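Making information loss visible can start very simply. The sketch below wraps each pipeline step so that the number of rows entering and leaving it is recorded. The records, the step functions, and the `run_pipeline` helper are all hypothetical and stand in for whatever a real pipeline would use.

```python
def run_pipeline(rows, steps):
    """Apply each (name, function) step in order and record what changed."""
    log = []
    for name, step in steps:
        rows_in = len(rows)
        rows = step(rows)
        log.append({"step": name, "rows_in": rows_in, "rows_out": len(rows)})
    return rows, log


def drop_duplicates(rows):
    """Keep the first occurrence of each (id, age) pair."""
    seen, unique = set(), []
    for row in rows:
        key = (row["id"], row["age"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def drop_missing_age(rows):
    return [r for r in rows if r["age"] is not None]


def drop_implausible_age(rows):
    # Assumed plausibility range; a real project would document its own.
    return [r for r in rows if 0 <= r["age"] <= 120]


# Hypothetical records, for illustration only.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 2, "age": None},
    {"id": 3, "age": 212},
]

cleaned, audit_log = run_pipeline(
    records,
    [
        ("drop_duplicates", drop_duplicates),
        ("drop_missing_age", drop_missing_age),
        ("drop_implausible_age", drop_implausible_age),
    ],
)

for entry in audit_log:
    print(entry)
```

Four records go in and one comes out. Without the log, that loss would be invisible; with it, anyone reviewing the pipeline can see which assumption removed which rows and question whether it was justified.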

In many advanced learning programs like a data science course in Pune, learners are encouraged to view the data lifecycle not just as a technical sequence but as a story that must be preserved with care.

Entropy does not announce itself loudly. It hides in subtle shifts, small assumptions, quiet drifts. Awareness is the most powerful defense.

Conclusion: Meaning Is the Real Asset

At its core, data work is not about numbers, algorithms, or dashboards. It is about meaning. When meaning fades, the systems we build become hollow. When we recognize entropy and learn to manage it, we protect the integrity of decisions, strategies, and outcomes.

In a world overflowing with data, clarity is more valuable than volume. The machine learning lifecycle is a journey where we must constantly guard the signal from being drowned by noise. Understanding data entropy reminds us that every step matters, and every decision shapes what the data ultimately reveals.